Page 694 - Emerging Trends and Innovations in Web-Based Applications and Technologies


International Journal of Trend in Scientific Research and Development (IJTSRD) @ www.ijtsrd.com eISSN: 2456-6470

Data Collection

The success of the Fake Logo Detection System relies heavily on the quality and diversity of the dataset used to train the model. The first step is to collect a comprehensive dataset of both real and counterfeit logos from a range of industries, such as technology, fashion, food, and entertainment. This diversity helps the model detect fake logos across multiple sectors rather than only in the domain it was trained on.

The dataset will be sourced from online repositories, brand databases, and potentially through partnerships with companies in brand protection. It will include logos with varying designs, colors, fonts, and orientations, as well as counterfeit logos altered in common ways, such as modified shapes, resized elements, or distorted text.

To ensure the dataset's quality and consistency, images will undergo pre-processing, which includes resizing, normalizing pixel values, and applying data augmentation techniques such as rotation and flipping. These steps help reduce overfitting and improve the model's ability to generalize.

In summary, the data collection phase aims to gather a large, diverse set of real and fake logos to train the system effectively, providing a robust foundation for accurate fake logo detection.

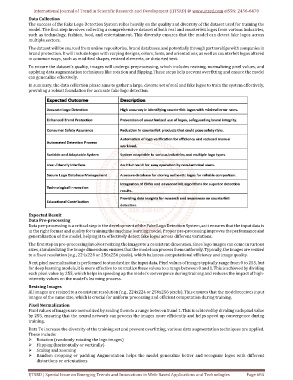

Expected Result

Data Pre-processing

Data pre-processing is a critical step in the development of the Fake Logo Detection System, as it ensures that the input data is in a suitable format and of sufficient quality for training the machine learning model. Proper pre-processing improves the performance and generalization of the model, helping it detect fake logos across different variations.

The first step in pre-processing is resizing the images to a consistent dimension. Since logo images come in various sizes, standardizing the dimensions lets the model process them uniformly. Typically, the images are resized to a fixed resolution (e.g., 224x224 or 256x256 pixels), which balances computational efficiency against image quality.
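To make the trade-off concrete, the short calculation below counts the values in a single input image at each of the two example resolutions. It assumes three color channels (RGB), which the text does not state, and `input_values` is a hypothetical helper name used only for illustration.

```python
def input_values(side, channels=3):
    """Count the values in one square image (channels=3 assumes RGB)."""
    return side * side * channels


for side in (224, 256):
    print(f"{side}x{side}: {input_values(side):,} values per image")

# Relative input size of the larger resolution compared with the smaller one.
ratio = input_values(256) / input_values(224)
print(f"256x256 carries {ratio:.2f}x the input values of 224x224")
```

Under this assumption, a 256x256 input carries roughly 31% more values than a 224x224 one, so the larger size keeps more detail at a higher cost per image.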

Next, pixel normalization is performed to standardize the input data. In 8-bit images, pixel values range from 0 to 255, but for deep learning models it is more effective to normalize these values to a range between 0 and 1. This is achieved by dividing each pixel value by 255, which helps speed up the model’s convergence during training and reduces the impact of high-intensity values on the model’s learning process.

Resizing Images

All images are resized to a consistent resolution (e.g., 224x224 or 256x256 pixels). This ensures that the model receives input images of the same size, which is required for uniform processing and efficient computation during training.
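The resizing step can be sketched as follows. This is a minimal illustration, not the system's actual implementation: it represents an image as a plain list of pixel rows and uses nearest-neighbour sampling, whereas a real pipeline would more likely rely on an image library with better interpolation. The name `resize_nearest` and the 224-pixel target are assumptions chosen to match the example resolution in the text.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a grid of pixels (list of rows) using nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    resized = []
    for y in range(out_h):
        # Map each output row back to the closest source row.
        src_row = image[min(in_h - 1, y * in_h // out_h)]
        resized.append(
            [src_row[min(in_w - 1, x * in_w // out_w)] for x in range(out_w)]
        )
    return resized


TARGET = 224  # assumed target side length, taken from the example in the text

# A synthetic 180x300 grayscale "logo" standing in for a real image.
logo = [[(x + y) % 256 for x in range(300)] for y in range(180)]
fixed = resize_nearest(logo, TARGET, TARGET)
print(len(fixed), len(fixed[0]))
```

Because every image leaves this step with the same height and width, images can be stacked into batches of a single fixed shape.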

Pixel Normalization

Pixel values are normalized by scaling them to a range between 0 and 1. This is achieved by dividing each pixel value by 255, which lets the neural network process the images more efficiently and helps speed up convergence during training.
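As a minimal sketch of this step, assuming 8-bit pixel values stored as a list of rows, the division by 255 looks like this. The name `normalize_pixels` is hypothetical, and in practice the same operation would usually be applied to a whole array at once.

```python
def normalize_pixels(image):
    """Scale 8-bit pixel values (0-255) to floats in the range [0.0, 1.0]."""
    return [[value / 255.0 for value in row] for row in image]


raw = [[0, 51, 255], [102, 204, 153]]
scaled = normalize_pixels(raw)

for row in scaled:
    print([round(value, 2) for value in row])
```

The darkest value (0) maps to 0.0 and the brightest (255) maps to 1.0, so every input lies in the same bounded range regardless of the source image.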

Data Augmentation

To increase the diversity of the training set and prevent overfitting, various data augmentation techniques are applied. These include:

Rotation (randomly rotating the logo images)

Flipping (horizontally or vertically)

Scaling and zooming

Random cropping or padding

Augmentation helps the model generalize better and recognize logos with different distortions or orientations.
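The sketch below illustrates several of these techniques on an image stored as a list of pixel rows. It is a simplified illustration under stated assumptions: rotation is limited to multiples of 90 degrees, scaling and zooming are omitted because they require interpolation, and all function names are hypothetical. A real pipeline would more likely use the augmentation utilities of a deep learning framework.

```python
import random


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate by 90 degrees clockwise (a simplification of free rotation)."""
    return [list(column) for column in zip(*image[::-1])]


def pad(image, border, fill=0):
    """Surround the image with a constant-valued border."""
    width = len(image[0]) + 2 * border
    edge = [[fill] * width for _ in range(border)]
    body = [[fill] * border + row + [fill] * border for row in image]
    return edge + body + [[fill] * width for _ in range(border)]


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def augment(image, rng):
    """Apply random flips and rotations, then pad and crop back to size."""
    if rng.random() < 0.5:
        image = flip_horizontal(image)
    if rng.random() < 0.5:
        image = flip_vertical(image)
    for _ in range(rng.randint(0, 3)):
        image = rotate_90_clockwise(image)
    height, width = len(image), len(image[0])
    # Padding followed by a random crop shifts the logo by a few pixels.
    return random_crop(pad(image, border=2), height, width, rng)


rng = random.Random(0)  # fixed seed so the example is reproducible
logo = [[row * 4 + col for col in range(4)] for row in range(4)]
sample = augment(logo, rng)
print(len(sample), len(sample[0]))
```

For square inputs, such as images already resized to a fixed resolution, the output of `augment` has the same shape as its input, so augmented samples can be mixed freely with the originals during training.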
